Understanding the Historical Milestones of Integrated Circuits

Integrated circuits stand among the most important inventions in human history. They revolutionized modern electronics, enabling the advancements that define the digital age. The invention of the integrated circuit in the late 1950s marked a pivotal milestone in electronic engineering. This innovation laid the foundation for technological miniaturization, shrinking functions that once required bulky machines into compact devices such as smartphones. The semiconductor industry, driven by IC production, has fueled global economic growth and connected billions of people through telecommunications.

Integrated circuits have reshaped industries and daily life. Their impact spans technological, economic, and social dimensions, as shown below:

| Key Impact Area | Description |
|---|---|
| Technological Miniaturization | Enabled the development of smaller, more portable devices, from smartphones to wearable tech. |
| Economic Growth | The semiconductor industry has become a major component of the global economy, creating millions of jobs. |
| Enhancement of Telecommunications | Fundamental to the operation of modern telecommunications equipment, connecting billions globally. |

The significance of the integrated circuit extends beyond electronics. It is a cornerstone of innovation, driving progress in computing, healthcare, and artificial intelligence, and it continues to shape the future of technology.

Key Takeaways

Integrated circuits changed electronics by making smaller devices, such as phones, possible.

The semiconductor industry, powered by these circuits, boosts jobs and the economy.

Jack Kilby and Robert Noyce independently invented the integrated circuit.

Moore's Law observes that the number of transistors on a chip doubles roughly every two years.

Today's challenges include heat dissipation and environmental impact, which are driving greener manufacturing methods.

Early History of the Integrated Circuit

The Era of Vacuum Tubes

The journey from transistors to integrated circuits began with the era of vacuum tubes. These glass tubes, which controlled the flow of electrons, were essential components in early electronic devices. However, they presented significant challenges. Their large size made devices bulky and difficult to transport. Heat generation was another major issue, as vacuum tubes consumed substantial power and often overheated. Reliability also posed a problem, with tubes frequently burning out and requiring replacement. These limitations hindered the advancement of electronics and prompted engineers to search for a more efficient solution.

The transition from vacuum tubes to transistors marked a turning point. Transistors offered a smaller, more reliable alternative, but the need for further miniaturization and integration remained. This quest laid the groundwork for the development of the integrated circuit.

Early Proposals and Concepts

Werner Jacobi's 1949 Patent for an Integrated Amplifier

In 1949, German engineer Werner Jacobi filed a groundbreaking patent for a semiconductor amplifier. His design featured five transistors arranged in a three-stage amplifier circuit. This innovation aimed to miniaturize devices like hearing aids and reduce production costs. Although Jacobi's invention did not achieve commercial success, it played a pivotal role in the history of the integrated circuit. His work demonstrated the potential for combining multiple transistors into a single device, paving the way for future advancements in electronics.

Geoffrey Dummer's 1952 Concept of an Integrated Circuit

In 1952, British engineer Geoffrey Dummer introduced a visionary concept that foreshadowed the modern integrated circuit. He proposed the idea of creating a solid block of electronic components without connecting wires. As Dummer described, "With the advent of the transistor and the work in semiconductors generally, it seems now possible to envisage electronics equipment in a solid block with no connecting wires. The block may consist of layers of insulating, conducting, rectifying and amplifying materials, the electronic functions being connected directly by cutting out areas of the various layers." Although Dummer's concept remained theoretical, it highlighted the potential for integrating multiple electronic functions into a single unit, inspiring future innovators.

The early history of the integrated circuit reflects a period of experimentation and vision. Engineers like Jacobi and Dummer laid the foundation for the revolutionary shift from transistors to integrated circuits, setting the stage for the technological breakthroughs that followed.

The Invention of the Integrated Circuit

First Integrated Circuit Invented by Jack Kilby

In 1958, Jack Kilby demonstrated the first working IC, marking a groundbreaking moment in the history of the integrated circuit. At Texas Instruments, Kilby faced significant challenges while developing this invention. He needed to miniaturize electronic components and integrate various circuit elements into a single semiconductor material. Despite these obstacles, Kilby successfully created a practical prototype that showcased the feasibility of his concept.

Kilby's prototype used germanium as the semiconductor material. This choice allowed him to integrate multiple circuit elements onto a single chip, leading to the creation of the first integrated circuit.

| Evidence | Explanation |
|---|---|
| Germanium used in Kilby's prototype | Enabled the integration of various circuit elements on a single semiconductor material, leading to the creation of the first integrated circuit. |

This invention laid the foundation for modern electronics, proving that circuits could be compact and efficient.

Robert Noyce and the Monolithic IC

In 1959, Robert Noyce at Fairchild Semiconductor conceived the planar integrated circuit, building on Jean Hoerni's planar process and revolutionizing the semiconductor industry. The approach involved several steps:

Coating a silicon wafer with a layer of silicon dioxide.

Etching openings in the oxide and diffusing dopants through them to form p-type silicon regions.

Diffusing further dopants to form n-type regions, creating junction transistors.

Depositing aluminum over the oxide to interconnect the components through the openings, with the oxide insulating and protecting the silicon surface.

This method allowed for the production of the first monolithic silicon IC chip. Unlike Kilby's germanium-based design, Noyce's silicon-based approach offered better scalability and reliability. In 1961, Noyce received the first patent for an integrated circuit, solidifying his role in the invention's history.

Collaboration and Recognition

Jack Kilby and Robert Noyce are both credited as inventors of the integrated circuit. They worked independently, Kilby at Texas Instruments and Noyce at Fairchild Semiconductor, and their contributions complemented each other. In 1966, both received the Stuart Ballantine Medal for their work. In 2000, Kilby received the Nobel Prize in Physics for his contributions. Although Noyce died in 1990, his legacy as a pioneer of the monolithic integrated circuit remains significant.

Geoffrey Dummer's 1952 vision of "electronics equipment in a solid block with no connecting wires" became a reality through the independent but complementary work of Kilby and Noyce.

Development and Commercialization of Integrated Circuits

Advancements in Manufacturing

Fairchild Semiconductor played a pivotal role in developing the planar process, which revolutionized the manufacturing of integrated circuits. Jean Hoerni's silicon dioxide passivation layer protected transistor surfaces, reducing defects and improving reliability. Robert Noyce adopted Kurt Lehovec's concept of p-n junction isolation, which electrically separated the transistors on a chip so they could function independently. Jay Last led the team that built the first planar integrated circuit at Fairchild. These advancements made integrated circuits commercially viable, especially for military and space applications.

Photolithography further transformed the semiconductor industry. This technique used light-sensitive photoresist and patterned masks to transfer intricate designs onto silicon wafers for etching and doping, enabling the production of smaller and more complex circuits. These innovations laid the foundation for the widespread adoption of integrated circuits across various industries.

Legal and Industry Milestones

The history of integrated circuits includes significant legal disputes, particularly between Texas Instruments and Fairchild Semiconductor. These battles revolved around patent claims for integrated circuit technology. By 1964, Texas Instruments secured rights to several key patents, restricting Fairchild's ability to license its technology. This limitation slowed the broader production of integrated circuits, even as Fairchild's planar technology became the industry standard.

In 1966, Texas Instruments faced a major legal defeat, prompting a shift in strategy. By 1967, both companies reached a mutual agreement on patent recognition and cross-licensing. This resolution allowed the semiconductor industry to focus on innovation rather than litigation, accelerating the development and production of integrated circuits.

Adoption Across Industries

During the 1960s, integrated circuits found their first major applications in military systems. The Autonetics Division of North American Aviation invested $16 million in integrated circuits for the Minuteman II missile's control and guidance systems. This program demonstrated that semiconductor integrated circuits could be trusted in complex, critical applications.

By the 1970s, advancements in manufacturing reduced costs, making integrated circuits accessible for consumer electronics. Hearing aids were among the first consumer products to use this technology. As costs continued to decline, integrated circuits became integral to televisions, radios, and other household devices. This transition marked a significant milestone in the history of integrated circuits, showcasing their versatility and impact on everyday life.

Modern Impacts and Future Trends

Integrated Circuits in Modern Technology

Ubiquity in computers, smartphones, and IoT devices

Integrated circuits have become essential in modern technology, powering a wide range of devices. Computers rely on them for processing and memory functions, while smartphones use them to manage communication, display, and power efficiency. In the Internet of Things (IoT), integrated circuits enable smart devices to connect and share data seamlessly.

Integrated circuits play an important role in communication systems, helping to transmit data faster and more efficiently. In home appliances such as TVs, refrigerators, and washing machines, microchips control and manage device functions. Integrated circuits are also used in medical devices such as blood pressure monitors, health monitors, and diagnostic imaging equipment to help improve the quality of health care.

Their versatility has made them a cornerstone of industries like telecommunications, healthcare, and automotive manufacturing.

Miniaturization and Moore's Law

The miniaturization of integrated circuits has transformed technology. Moore's Law, first described by Gordon Moore in 1965, observed that the number of transistors on a chip was doubling roughly every year; in 1975, Moore revised the rate to about every two years. This observation has driven innovation, enabling smaller, more powerful devices. Over time, circuit density has increased, improving performance and reducing costs. Moore's Law has become a guiding principle for the semiconductor industry, pushing the boundaries of what integrated circuits can achieve.
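To make the doubling rate concrete, the sketch below models it as simple exponential growth, N(t) = N0 · 2^(t/T), where N0 is the starting transistor count, t is elapsed time in years, and T is the doubling period. This is an idealization of an industry trend, not a physical law; the function name `projected_transistors` and the starting count of 1,000 transistors are hypothetical choices for illustration.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Return the transistor count after `years` under an idealized
    fixed-rate doubling model: N(t) = N0 * 2 ** (t / T)."""
    if doubling_period_years <= 0:
        raise ValueError("doubling period must be positive")
    return initial_count * 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    start = 1_000  # hypothetical starting transistor count
    # Compare a one-year and a two-year doubling period over a decade.
    for period in (1.0, 2.0):
        count = projected_transistors(start, years=10, doubling_period_years=period)
        print(f"doubling every {period:g} yr -> {count:,.0f} transistors after 10 years")
    # doubling every 1 yr -> 1,024,000 transistors after 10 years
    # doubling every 2 yr -> 32,000 transistors after 10 years
```

With these inputs, the one-year period yields 1,024,000 transistors and the two-year period yields 32,000, which shows how strongly the assumed doubling period affects long-range projections.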

Emerging Innovations

Advances in nanotechnology and quantum computing

Emerging technologies are reshaping the future of integrated circuits. Nanotechnology allows for the creation of smaller and more efficient components, enhancing performance. Quantum computing, another groundbreaking innovation, promises to revolutionize fields like cryptography and data analysis by solving certain classes of problems much faster than classical computers.

Neural network processing in AI applications enhances edge computing, enabling local devices to perform tasks efficiently without relying on cloud systems.

AI-driven design tools automate parts of the traditional chip-design process, improving the reliability and performance of integrated circuits.

These advancements highlight the potential of integrated circuits to address complex challenges in healthcare, autonomous vehicles, and beyond.

Bio-integrated circuits and AI applications

Bio-integrated circuits represent a significant leap forward. These circuits merge with biological systems, enabling applications in health monitoring and medical diagnostics. AI applications also continue to evolve, with integrated circuits playing a key role in neural network processing. This technology supports real-time decision-making in fields like robotics and personalized medicine.

Challenges and Opportunities

Addressing heat dissipation and energy efficiency

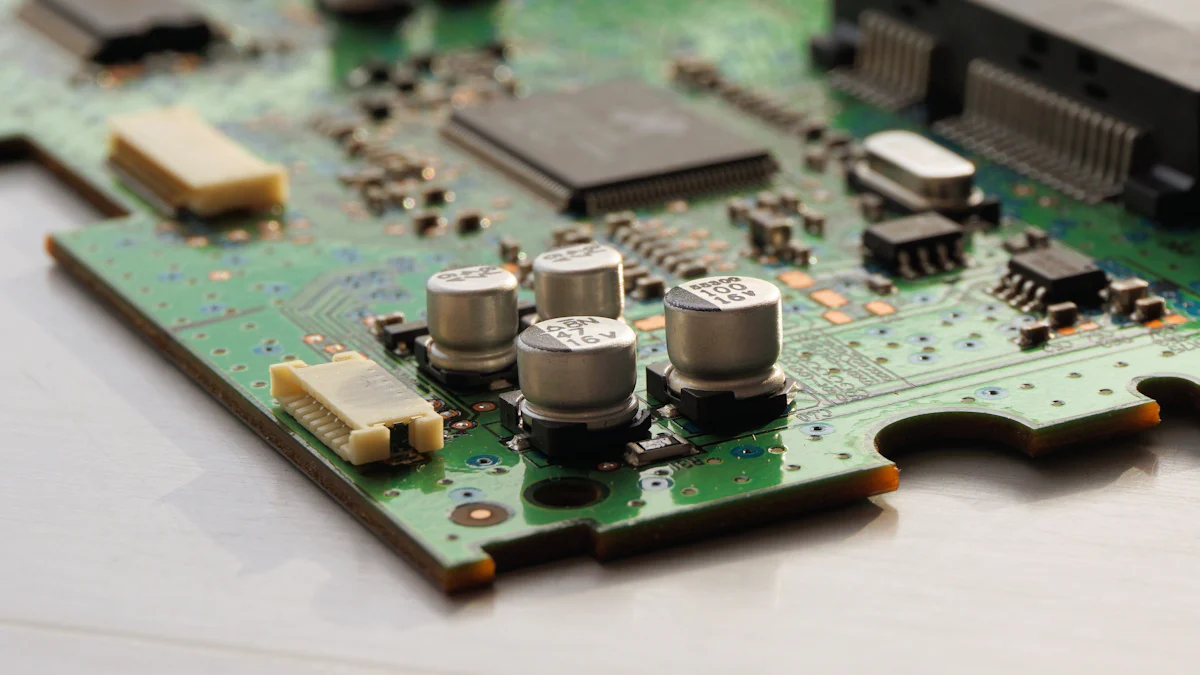

Modern integrated circuits face challenges related to heat management and energy efficiency. Densely packed components generate significant heat, so designers must:

Implement cooling systems, such as heat sinks, to prevent hot spots.

Ensure reliability under varying thermal conditions.
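A common first-order way to reason about heat sinks is the steady-state thermal-resistance model, T_j = T_a + P · (θ1 + θ2 + ...), where T_j is the chip's junction temperature, T_a is the ambient temperature, P is the power the chip dissipates, and each θ (in °C/W) is a thermal resistance along the path from the die to the surrounding air. The sketch below assumes this simplified model; the function name, the 2 W power figure, and all resistance values are hypothetical, and real values come from a device's datasheet.

```python
from typing import Sequence


def junction_temperature(ambient_c: float, power_w: float,
                         thermal_resistances_c_per_w: Sequence[float]) -> float:
    """Estimate steady-state junction temperature in degrees Celsius.

    Uses the first-order series model T_j = T_a + P * (theta_1 + theta_2 + ...),
    where each theta is a thermal resistance in degrees C per watt.
    """
    if power_w < 0:
        raise ValueError("power must be non-negative")
    return ambient_c + power_w * sum(thermal_resistances_c_per_w)


if __name__ == "__main__":
    ambient = 25.0  # hypothetical room temperature, deg C
    power = 2.0     # hypothetical chip power dissipation, W

    # Hypothetical: bare package with a junction-to-ambient resistance of 50 C/W.
    bare = junction_temperature(ambient, power, [50.0])

    # Hypothetical: junction-to-case, case-to-sink (thermal paste), and
    # sink-to-ambient resistances once a heat sink is attached.
    with_sink = junction_temperature(ambient, power, [1.5, 0.5, 8.0])

    print(f"without heat sink: {bare:.1f} C")      # without heat sink: 125.0 C
    print(f"with heat sink:    {with_sink:.1f} C")  # with heat sink:    45.0 C
```

Under these assumed numbers, the heat sink lowers the estimated junction temperature from 125.0 °C to 45.0 °C, illustrating why densely packed chips need a low-resistance path for heat to escape.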

These challenges drive innovation in materials and design to maintain performance and durability.

Sustainable and eco-friendly IC production

Sustainability has become a priority in integrated circuit production. Manufacturers are adopting eco-friendly practices to reduce environmental impact.

Lead-free materials and RoHS compliance minimize health risks.

Recyclable metals such as copper and aluminum reduce demand for raw materials.

Water-based inks and selective soldering techniques lower chemical waste.

Certifications like ISO 14001 reflect a commitment to green manufacturing.

These efforts align with global goals for sustainable development, ensuring integrated circuits remain a vital yet responsible technology.

The journey of integrated circuits highlights a series of transformative milestones. Early concepts, such as Werner Jacobi's 1949 patent and Geoffrey Dummer's 1952 vision, laid the groundwork for Jack Kilby's first IC in 1958 and Robert Noyce's silicon-based advancements in 1959. These breakthroughs revolutionized electronic engineering by combining multiple components on a single chip.

Integrated circuits enabled technological miniaturization, creating portable devices like smartphones.

They fueled global economic growth and advanced fields like artificial intelligence and healthcare.

This innovation continues to shape society, driving progress in connectivity, energy efficiency, and medical diagnostics. The future of integrated circuits promises even greater possibilities.

FAQ

What is an integrated circuit (IC)?

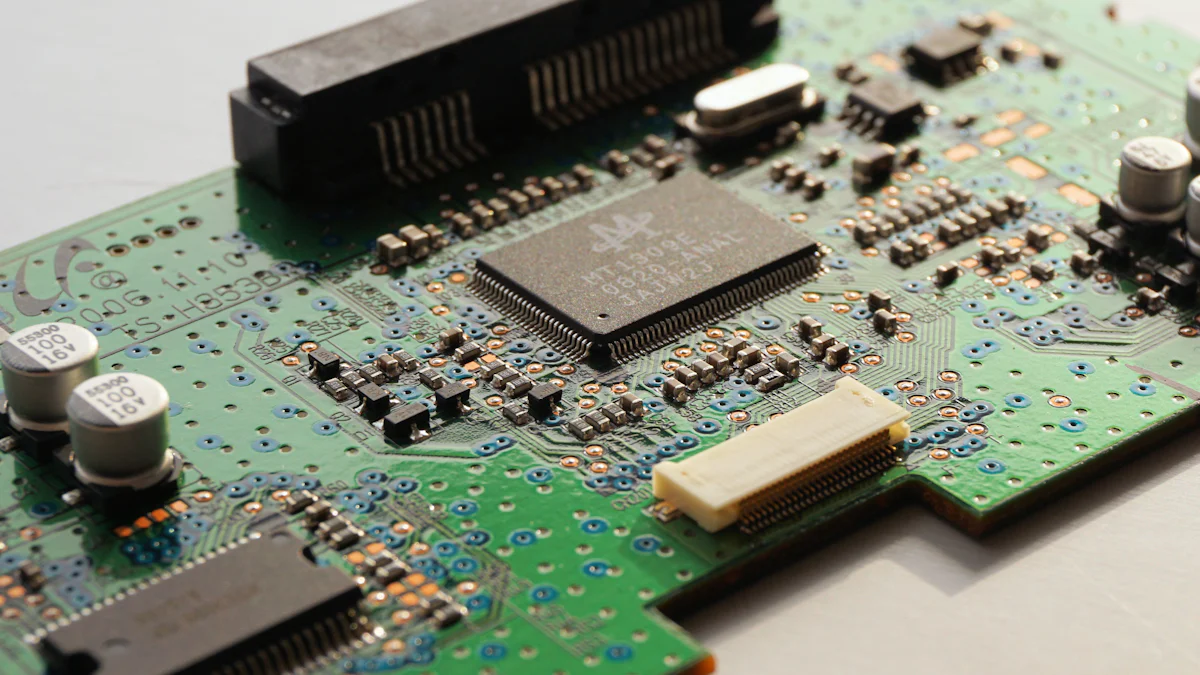

An integrated circuit (IC) is a small chip that contains multiple electronic components, such as transistors, resistors, and capacitors. These components work together to perform specific functions. ICs are essential in modern electronics, powering devices like computers, smartphones, and medical equipment.

Who invented the integrated circuit?

Jack Kilby and Robert Noyce are credited with inventing the integrated circuit. Kilby created the first working IC in 1958 using germanium. Noyce developed the silicon-based monolithic IC in 1959, which became the industry standard due to its scalability and reliability.

Why are integrated circuits important?

Integrated circuits revolutionized electronics by enabling miniaturization, improving reliability, and reducing costs. They power modern technologies, including computers, smartphones, and IoT devices. Their versatility supports advancements in fields like healthcare, telecommunications, and artificial intelligence.

How does Moore's Law relate to integrated circuits?

Moore's Law predicts that the number of transistors on an IC doubles approximately every two years. This principle drives innovation in the semiconductor industry, enabling smaller, faster, and more efficient devices over time.

What challenges do integrated circuits face today?

Modern ICs face challenges like heat dissipation, energy efficiency, and sustainability. Engineers address these issues by developing advanced cooling systems, eco-friendly materials, and innovative designs to ensure performance and reduce environmental impact.

💡 Tip: Understanding the history and evolution of integrated circuits helps you appreciate their role in shaping modern technology.
